Mar 20 Research Wrapup

Paper Summaries:

- Internet-augmented language models through few-shot prompting for open-domain question answering

  - DeepMind: condition Gopher models on text retrieved from Google searches

  - Achieves improvements in open-domain question answering

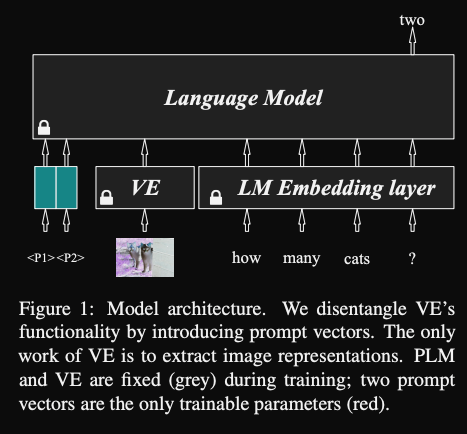
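The retrieval-conditioning idea above can be sketched as prompt assembly. This is a minimal illustration, not DeepMind's pipeline: the function name `build_prompt`, the `Evidence:` / `Question:` / `Answer:` template, and the example strings are all hypothetical, and the search step that produces the evidence is left out.

```python
def build_prompt(question, evidence, shots):
    """Assemble a few-shot prompt that conditions on retrieved text.

    shots: list of (evidence, question, answer) demonstration triples.
    evidence: retrieved paragraph for the new question (assumed to come
    from a search step that is not shown here).
    """
    blocks = []
    for shot_evidence, shot_question, shot_answer in shots:
        blocks.append(
            f"Evidence: {shot_evidence}\n"
            f"Question: {shot_question}\n"
            f"Answer: {shot_answer}"
        )
    # The final block leaves the answer open for the model to complete.
    blocks.append(f"Evidence: {evidence}\nQuestion: {question}\nAnswer:")
    return "\n\n".join(blocks)


# Hypothetical demonstration and query, for illustration only.
shots = [
    ("Paris is the capital of France.", "What is the capital of France?", "Paris"),
]
prompt = build_prompt(
    "Who wrote Hamlet?",
    "Hamlet is a tragedy written by William Shakespeare.",
    shots,
)
```

The model weights stay untouched in this setup; only the prompt changes with each query.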

- Modular and Parameter-Efficient Multimodal Fusion with Prompting

  - Uses prompting to align ViT and BART models

  - Prompt tuning with only a small number of trainable parameters

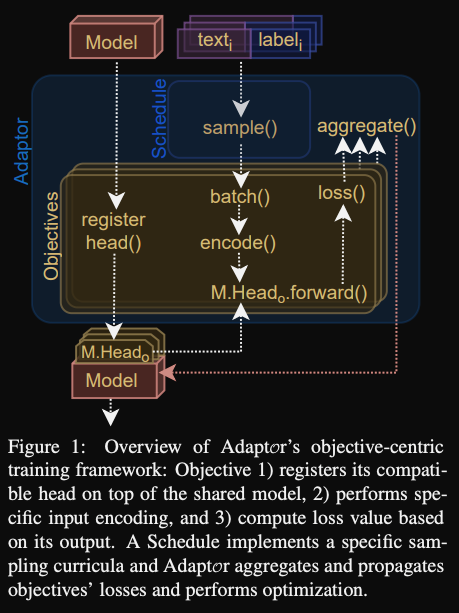

- AdaptOr: Objective-Centric Adaptation Framework for Language Models

  - Framework for multi-objective training

  - Customizable data sampling schedules

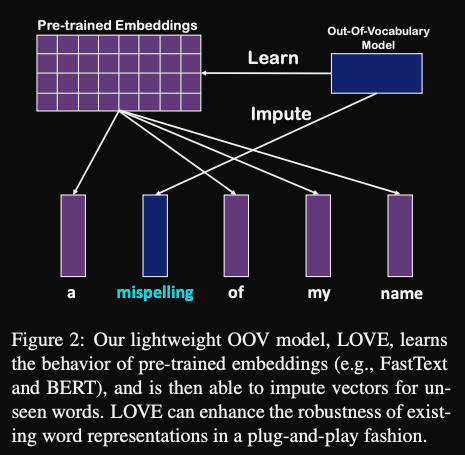

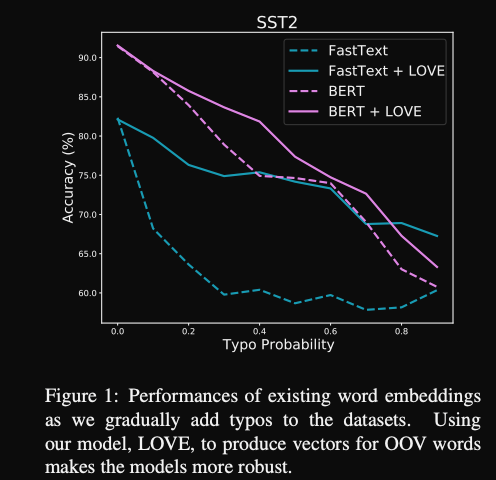
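To make "data sampling schedules" concrete, here is a small sketch of two ways to order training steps across objectives. It is a generic illustration and does not use the framework's API: `sequential_schedule`, `round_robin_schedule`, and the objective names are hypothetical.

```python
from itertools import cycle, islice


def sequential_schedule(objectives, steps_each):
    """Run every step of one objective before moving to the next."""
    for name in objectives:
        for _ in range(steps_each):
            yield name


def round_robin_schedule(objectives, total_steps):
    """Alternate between objectives one step at a time."""
    return islice(cycle(objectives), total_steps)


# Hypothetical objective names, for illustration only.
objectives = ["mlm", "translation"]
seq = list(sequential_schedule(objectives, 2))
rr = list(round_robin_schedule(objectives, 4))
# seq == ["mlm", "mlm", "translation", "translation"]
# rr  == ["mlm", "translation", "mlm", "translation"]
```

A training loop would draw one batch from whichever objective the schedule yields at each step.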

- Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost

  - Train a lightweight model to infer embeddings of OOV words

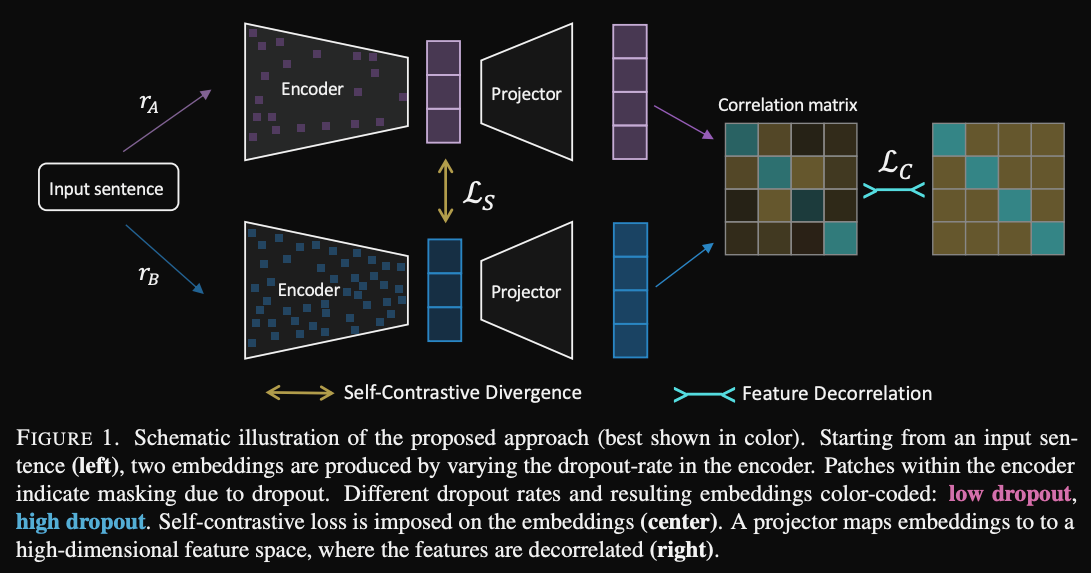
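As a rough picture of what inferring an OOV embedding means, the sketch below imputes a vector from character n-grams shared with in-vocabulary words. This is a simpler stand-in technique (n-gram averaging), not the learned model from the paper; all function names and the toy two-dimensional embeddings are assumptions made for illustration.

```python
from collections import defaultdict


def char_ngrams(word, n=3):
    """Character n-grams of a word padded with boundary markers."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def mean(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def fit_ngram_table(vocab_embeddings, n=3):
    """Give each n-gram the mean embedding of the known words containing it."""
    buckets = defaultdict(list)
    for word, vec in vocab_embeddings.items():
        for gram in char_ngrams(word, n):
            buckets[gram].append(vec)
    return {gram: mean(vecs) for gram, vecs in buckets.items()}


def impute(word, ngram_table, n=3):
    """Average the vectors of the word's known n-grams; None if none match."""
    known = [ngram_table[g] for g in char_ngrams(word, n) if g in ngram_table]
    return mean(known) if known else None


# Toy embeddings, for illustration only.
vocab = {"cat": [1.0, 0.0], "car": [0.0, 1.0]}
table = fit_ngram_table(vocab)
vec = impute("cab", table)  # shares only "<ca" with the vocabulary
```

Here `cab` inherits the average of `cat` and `car` through the shared prefix n-gram, while a word with no overlapping n-grams gets no vector at all.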

- SCD: Self-Contrastive Decorrelation for Sentence Embeddings

  - Method for self-supervised learning of sentence embeddings without contrastive pairs

  - Does not outperform SimCSE (or PromptBERT or DCPCSE)

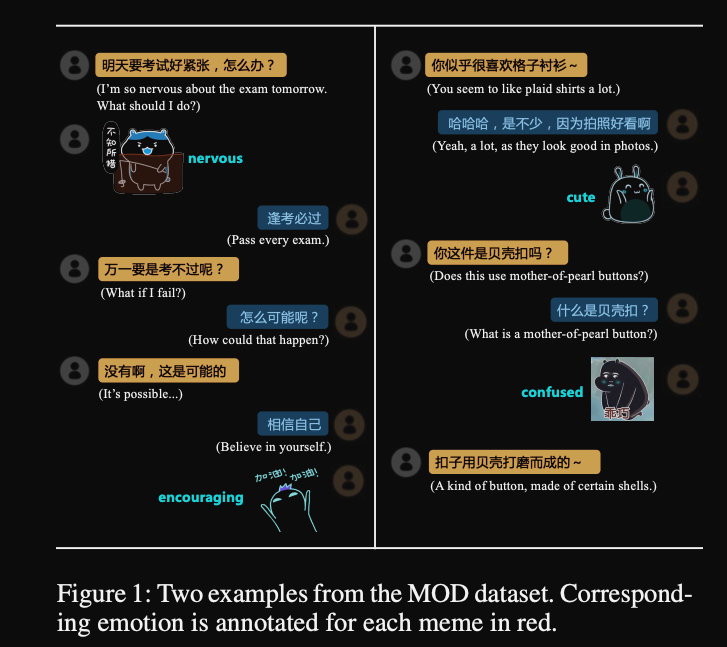

- Towards Building an Open-Domain Dialogue System Incorporated with Internet Memes

  - Can we train a language model to understand and make use of memes?

  - Yes, with meme retrieval and meme emotion classification models